Creating Frame Processor Plugins

Creating a Frame Processor Plugin for iOS

The Frame Processor Plugin API is built to be as extensible as possible, which allows you to create custom Frame Processor Plugins. In this guide we will create a custom Face Detector Plugin which can be used from JS.

iOS Frame Processor Plugins can be written in either Objective-C or Swift.

Automatic setup

Run the Vision Camera Plugin Builder CLI:

npx vision-camera-plugin-builder@latest ios

info

The CLI will ask you for the path to your project's .xcodeproj file, the name of the plugin (e.g. FaceDetectorFrameProcessorPlugin), the name of the exposed method (e.g. detectFaces), and the language you want to use for plugin development (Objective-C, Objective-C++ or Swift).

For reference see the CLI's docs.

Manual setup

Objective-C

- Open your Project in Xcode

- Create an Objective-C source file. For the Face Detector Plugin this will be called FaceDetectorFrameProcessorPlugin.m.

- Add the following code:

    #import <VisionCamera/FrameProcessorPlugin.h>
    #import <VisionCamera/FrameProcessorPluginRegistry.h>
    #import <VisionCamera/Frame.h>

    @interface FaceDetectorFrameProcessorPlugin : FrameProcessorPlugin
    @end

    @implementation FaceDetectorFrameProcessorPlugin

    - (instancetype)initWithProxy:(VisionCameraProxyHolder*)proxy
                      withOptions:(NSDictionary* _Nullable)options {
      self = [super initWithProxy:proxy withOptions:options];
      return self;
    }

    - (id)callback:(Frame*)frame withArguments:(NSDictionary*)arguments {
      CMSampleBufferRef buffer = frame.buffer;
      UIImageOrientation orientation = frame.orientation;
      // code goes here
      return nil;
    }

    VISION_EXPORT_FRAME_PROCESSOR(FaceDetectorFrameProcessorPlugin, detectFaces)

    @end

note

The Frame Processor Plugin can be initialized from JavaScript using VisionCameraProxy.initFrameProcessorPlugin("detectFaces").

- Implement your Frame Processing. See the Example Plugin (Objective-C) for reference.

Swift

- Open your Project in Xcode

- Create a Swift file, for the Face Detector Plugin this will be

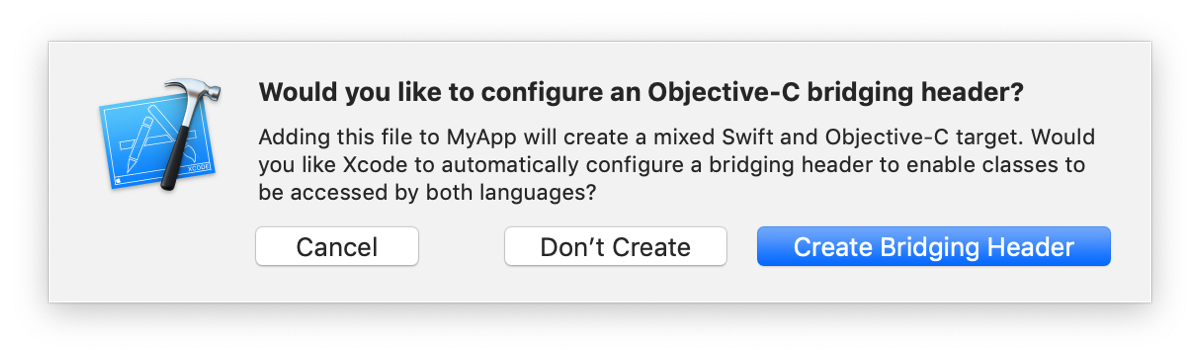

FaceDetectorFrameProcessorPlugin.swift. If Xcode asks you to create a Bridging Header, press create.

- In the Swift file, add the following code:

    import VisionCamera

    @objc(FaceDetectorFrameProcessorPlugin)
    public class FaceDetectorFrameProcessorPlugin: FrameProcessorPlugin {
      public override init(proxy: VisionCameraProxyHolder, options: [AnyHashable : Any]! = [:]) {
        super.init(proxy: proxy, options: options)
      }

      // The return type is optional (Any?) so that returning nil compiles.
      public override func callback(_ frame: Frame, withArguments arguments: [AnyHashable : Any]?) -> Any? {
        let buffer = frame.buffer
        let orientation = frame.orientation
        // code goes here
        return nil
      }
    }

- Create an Objective-C source file that will be used to automatically register your plugin:

    #import <VisionCamera/FrameProcessorPlugin.h>
    #import <VisionCamera/FrameProcessorPluginRegistry.h>

    // Replace "YOUR_XCODE_PROJECT_NAME" with the actual name of your Xcode project.
    #import "YOUR_XCODE_PROJECT_NAME-Swift.h"

    VISION_EXPORT_SWIFT_FRAME_PROCESSOR(FaceDetectorFrameProcessorPlugin, detectFaces)

- Implement your Frame Processing. See the Example Plugin (Swift) for reference.
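Whichever language the native plugin is written in, the JS side looks it up by its exported name with VisionCameraProxy.initFrameProcessorPlugin("detectFaces"), as the note in the Objective-C steps mentions. The following is a minimal TypeScript sketch of a wrapper around that call, not the library's own code. FrameLike, PluginLike, ProxyLike and createDetectFaces are hypothetical names introduced here so that the snippet compiles without react-native-vision-camera installed. It also assumes the lookup yields undefined when no plugin is registered under that name, and that the returned plugin object exposes a call(frame) method.

```typescript
// Structural stand-ins for the library's types (assumptions for this sketch).
// In an app, use Frame and VisionCameraProxy from react-native-vision-camera.
interface FrameLike {
  width: number;
  height: number;
}

interface PluginLike {
  call(frame: FrameLike, args?: Record<string, unknown>): unknown;
}

interface ProxyLike {
  initFrameProcessorPlugin(
    name: string,
    options?: Record<string, unknown>,
  ): PluginLike | undefined;
}

// Hypothetical helper: looks the native plugin up once, then returns a
// function that forwards each frame to it.
function createDetectFaces(proxy: ProxyLike): (frame: FrameLike) => unknown {
  // "detectFaces" is the name given to VISION_EXPORT_FRAME_PROCESSOR or
  // VISION_EXPORT_SWIFT_FRAME_PROCESSOR on the native side.
  const plugin = proxy.initFrameProcessorPlugin("detectFaces");

  return (frame) => {
    "worklet"; // no-op in plain TypeScript; marks a worklet inside an app
    if (plugin == null) {
      throw new Error('Failed to load Frame Processor Plugin "detectFaces"!');
    }
    // Forwards to the native callback; the template plugin above returns nil.
    return plugin.call(frame);
  };
}
```

In an app, you would pass the real VisionCameraProxy to createDetectFaces and call the returned function from inside a frame processor. Failing loudly when the lookup returns nothing makes a misspelled or unregistered plugin name surface on the first frame rather than yielding silent empty results.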